Background

I love color. In fact, one of the first posts I ever wrote for my developer website covered setting up color printing for console output (which is super useful for distinguishing errors from normal informational output).

In my current code base, I have a whole library dedicated to Color. In this article, I’d like to share some code and insights for a few constructs I find really useful in everyday development.

RGB Color

Let’s start with the basics. Color32 is a simple 32-bit color structure with four 8-bit unsigned integer components: red (R), green (G), blue (B), alpha (A). Each component ranges over [0,255].

The class has only a small set of member functions (clamping, linear interpolation between two colors, etc.). A Color32 can also be specified from four floats (range: [0,1]), though the component values are immediately converted to the byte representation.
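To make the description concrete, here is a minimal sketch of what such a structure might look like. It is not the actual class: the FromFloats name, the rounding policy, and the assert on the interpolation parameter are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical stand-in for Color32. Only the R, G, B, A components and the
// Lerp name come from the article; everything else is an assumption.
struct Color32
{
    std::uint8_t R, G, B, A;

    Color32(std::uint8_t r, std::uint8_t g, std::uint8_t b, std::uint8_t a = 255)
        : R(r), G(g), B(b), A(a) {}

    // Build from floats in [0,1]. Inputs are clamped and rounded to bytes
    // right away, so no float state is kept afterwards.
    static Color32 FromFloats(float r, float g, float b, float a = 1.0f)
    {
        return Color32(ToByte(r), ToByte(g), ToByte(b), ToByte(a));
    }

    // Component-wise linear interpolation from a (t = 0) to b (t = 1).
    static Color32 Lerp(const Color32& a, const Color32& b, float t)
    {
        assert(t >= 0.0f && t <= 1.0f);
        return Color32(LerpByte(a.R, b.R, t), LerpByte(a.G, b.G, t),
                       LerpByte(a.B, b.B, t), LerpByte(a.A, b.A, t));
    }

private:
    static std::uint8_t ToByte(float v)
    {
        const float clamped = std::min(1.0f, std::max(0.0f, v));
        return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
    }

    static std::uint8_t LerpByte(std::uint8_t x, std::uint8_t y, float t)
    {
        return static_cast<std::uint8_t>(std::lround(x + (y - x) * t));
    }
};
```

With this sketch, Color32::FromFloats(1.0f, 0.5f, 0.0f) stores the bytes (255, 128, 0, 255), since 127.5 rounds away from zero.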

Color-Space Conversions

RGB is so ubiquitous because it’s the color-space that rendering API calls expect for their color inputs. However, converting from RGB to other color-spaces (and back) is very important because some color operations are more natural in other color-spaces.

For the purpose of this article, we will concentrate on Hue-Saturation-Value (HSV).

HSV Color

HsvColor contains four float components: hue (H), saturation (S), value (V), alpha (A). Each component ranges over [0,1].

It is important to note that the HSV color-space is a cylinder, and the hue component is an angular value. I like to keep hue in the same [0,1] range as the other components (so 1.0 corresponds to a full 360° turn), but that’s just a personal preference. Just be sure to convert your hue appropriately when implementing your RgbToHsv() and HsvToRgb() routines.
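As an illustration of handling a hue stored in [0,1], the sketch below implements the standard sextant-based conversion in both directions. The struct layouts are simplified stand-ins, and the ToByte helper and the asserts are assumptions; only the function names and the ColorOps namespace echo the article.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Simplified stand-ins for the article's types (assumed layouts).
struct Color32  { std::uint8_t R, G, B, A; };
struct HsvColor { float H, S, V, A; };   // all components in [0,1]

namespace ColorOps
{
    // Hypothetical helper: clamp to [0,1], then round to a byte.
    inline std::uint8_t ToByte(float v)
    {
        const float clamped = std::min(1.0f, std::max(0.0f, v));
        return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
    }

    inline HsvColor RgbToHsv(const Color32& c)
    {
        const float r = c.R / 255.0f, g = c.G / 255.0f, b = c.B / 255.0f;
        const float maxC = std::max({r, g, b});
        const float minC = std::min({r, g, b});
        const float delta = maxC - minC;

        float h = 0.0f;                      // grays keep hue 0
        if (delta > 0.0f)
        {
            if (maxC == r)      h = (g - b) / delta;         // in [-1,1]
            else if (maxC == g) h = (b - r) / delta + 2.0f;  // in [1,3]
            else                h = (r - g) / delta + 4.0f;  // in [3,5]
            h /= 6.0f;                       // sextants -> [0,1] hue
            if (h < 0.0f) h += 1.0f;         // wrap negative angles
        }
        const float s = (maxC > 0.0f) ? delta / maxC : 0.0f;
        return HsvColor{h, s, maxC, c.A / 255.0f};
    }

    inline Color32 HsvToRgb(const HsvColor& hsv)
    {
        assert(hsv.S >= 0.0f && hsv.S <= 1.0f && hsv.V >= 0.0f && hsv.V <= 1.0f);
        // Wrap hue into one turn, then scale to six sextants.
        const float h6 = (hsv.H - std::floor(hsv.H)) * 6.0f;
        const int sextant = static_cast<int>(h6) % 6;
        const float f = h6 - std::floor(h6);
        const float v = hsv.V;
        const float p = v * (1.0f - hsv.S);
        const float q = v * (1.0f - hsv.S * f);
        const float t = v * (1.0f - hsv.S * (1.0f - f));

        float r, g, b;
        switch (sextant)
        {
        case 0:  r = v; g = t; b = p; break;
        case 1:  r = q; g = v; b = p; break;
        case 2:  r = p; g = v; b = t; break;
        case 3:  r = p; g = q; b = v; break;
        case 4:  r = t; g = p; b = v; break;
        default: r = v; g = p; b = q; break;
        }
        return Color32{ToByte(r), ToByte(g), ToByte(b), ToByte(hsv.A)};
    }
}
```

If you prefer hue in degrees, the only change is to divide by 60 instead of multiplying by 6 (and to multiply by 60 instead of dividing by 6 on the way back).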

Furthermore, don’t be troubled that this object is a bit more heavyweight than the Color32. The main purpose for the HsvColor is to use it as a vehicle for color computation as opposed to storage.

Color Generator

Generating a good sequence of unique colors is not as trivial as it sounds. Picking colors with a random number generator tends to produce poor sequences (neighboring colors often end up too similar), and I didn’t want to limit myself to a hand-picked list of “good” colors. Instead, I wanted to be able to generate a list of colors of arbitrary size, depending upon the requirements of the code. So I read a couple of articles on the subject (see References below) and implemented my own lightweight ColorGenerator class.

Internally, it uses the HSV color-space to generate a new unique color each time Generate() is called. The saturation and value components are kept constant during generation, while the hue is advanced around the HSV color cylinder by the inverse of the golden ratio on each call.

    const float cInvGoldenRatio = 0.618033988749895f;

The Generate() routine below uses the cInvGoldenRatio constant as the hue increment.

    // Not const: each call advances the generator's stored hue.
    Color32 Generate()
    {
        const float nextHue = mHsvColor.H + cInvGoldenRatio;
        mHsvColor.H = MathCore::Wrap(nextHue, 0.0f, 1.0f);
        return ColorOps::HsvToRgb(mHsvColor);
    }

It’s important to note that the colors are not pre-generated and stored. Instead, I simply have a single HsvColor that stores the state of the generator between calls to Generate().
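To see how the hue moves from call to call, here is a small hue-only sketch. HueGenerator and Next() are hypothetical names, and the wrap is done with std::floor rather than the MathCore::Wrap helper used above, whose exact behavior is not shown in this article.

```cpp
#include <cassert>
#include <cmath>

const float cInvGoldenRatio = 0.618033988749895f;

// Hypothetical hue-only generator. It mirrors the stepping logic of
// Generate() but returns the hue itself so the sequence is easy to inspect.
class HueGenerator
{
public:
    explicit HueGenerator(float startHue = 0.0f) : mHue(startHue)
    {
        assert(startHue >= 0.0f && startHue < 1.0f);
    }

    // Advance by the inverse golden ratio and wrap back into [0,1).
    // Only one float of state is kept between calls.
    float Next()
    {
        const float next = mHue + cInvGoldenRatio;
        mHue = next - std::floor(next);
        return mHue;
    }

private:
    float mHue;
};
```

Starting from a hue of 0, the first three calls return roughly 0.618, 0.236, and 0.854, so each of these early hues lands in one of the widest gaps left by the previous ones.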

Color Palette

The ColorPalette class is a named container of colors. The array of colors is set on construction, and each individual color can be accessed via the bracket operator.

I call it a “palette” because it stores a fixed array of colors that are not assumed to be associated with each other in any particular way (order doesn’t matter, etc.). Although this class seems trivial, it serves as a basis for a couple of really cool constructs.
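A container like this could be sketched as follows. The accessor names Name() and Count() and the use of std::vector are assumptions for illustration (the ramp code later in this article reads a Count member directly), and Color32 is reduced to a bare struct to keep the example self-contained.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Simplified stand-in for the article's Color32.
struct Color32 { std::uint8_t R, G, B, A; };

// Hypothetical sketch of a named, fixed set of colors.
class ColorPalette
{
public:
    // The colors are fixed at construction; there is no way to add more.
    ColorPalette(std::string name, std::vector<Color32> colors)
        : mName(std::move(name)), mColors(std::move(colors))
    {
        assert(!mColors.empty());
    }

    const std::string& Name() const { return mName; }
    std::size_t Count() const { return mColors.size(); }

    // Read-only access to an individual color via the bracket operator.
    const Color32& operator[](std::size_t index) const
    {
        assert(index < mColors.size());
        return mColors[index];
    }

private:
    std::string mName;
    std::vector<Color32> mColors;
};
```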

Prefab Color Palettes

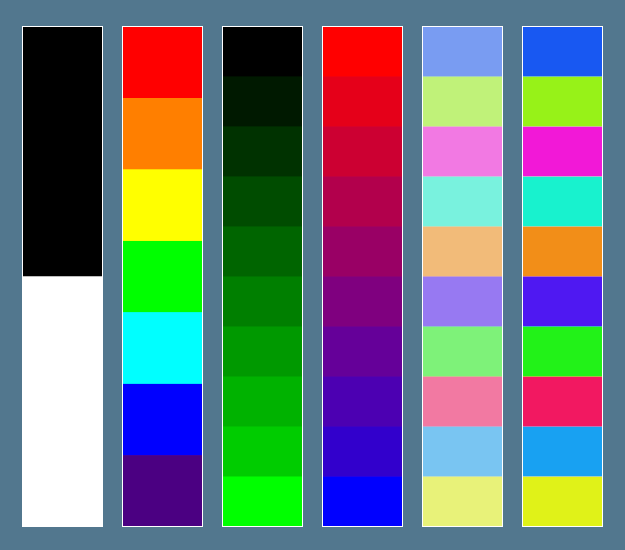

As a convenience, I have a set of ColorPalette creation routines (listed and respectively displayed in the image below).

- Black and White.

- Rainbow: Red, Orange, Yellow, Green, Cyan, Blue, Violet.

- Monochrome: single color spectrum (more precisely: a spectrum from black to a single color).

- Spectrum: a set of bands between two colors.

- Pastels: a generated set of pastel colors. (h:0.0f, s:0.5f, v:0.95f)

- Bolds: a generated set of saturated colors. (h:0.0f, s:0.9f, v:0.95f)
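As one possible reading of the Spectrum and Monochrome entries, the sketch below builds evenly spaced bands between two colors and treats Monochrome as a spectrum that starts at black. The function names, the band-count parameter, and the even spacing are assumptions; the routines return a plain vector that could then be handed to a palette.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Simplified stand-in for the article's Color32.
struct Color32 { std::uint8_t R, G, B, A; };

// Hypothetical helper: interpolate one byte channel and round to nearest.
inline std::uint8_t LerpByte(std::uint8_t x, std::uint8_t y, float t)
{
    return static_cast<std::uint8_t>(std::lround(x + (y - x) * t));
}

// Spectrum: bandCount evenly spaced colors from 'from' to 'to' (inclusive).
inline std::vector<Color32> MakeSpectrum(const Color32& from, const Color32& to,
                                         std::size_t bandCount)
{
    assert(bandCount > 0);
    std::vector<Color32> bands;
    bands.reserve(bandCount);
    for (std::size_t i = 0; i < bandCount; ++i)
    {
        // A single band has no span to interpolate over, so it uses 'from'.
        const float t = (bandCount > 1)
            ? static_cast<float>(i) / static_cast<float>(bandCount - 1)
            : 0.0f;
        bands.push_back(Color32{LerpByte(from.R, to.R, t), LerpByte(from.G, to.G, t),
                                LerpByte(from.B, to.B, t), LerpByte(from.A, to.A, t)});
    }
    return bands;
}

// Monochrome: a spectrum from opaque black to a single color.
inline std::vector<Color32> MakeMonochrome(const Color32& color, std::size_t bandCount)
{
    const Color32 black{0, 0, 0, 255};
    return MakeSpectrum(black, color, bandCount);
}
```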

Color Ring

A ColorRing is a simple wrapper class that operates on a given ColorPalette, treating it like a circular list. Each time client code calls GetColor(), the current color is returned and the current color index is increased (wrapping back to 0 if it passes the last color).

This class is useful when you have a group of items but want to limit their color choices to a certain set of colors (whose size may or may not equal the number of items): build a palette with your desired set of colors and then use a ColorRing when performing the color assignment.
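The circular behavior can be sketched in a few lines. This version copies a plain vector of colors instead of wrapping a ColorPalette, purely to keep the example self-contained; that choice and the member names are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Simplified stand-in for the article's Color32.
struct Color32 { std::uint8_t R, G, B, A; };

// Hypothetical sketch of a ring over a fixed set of colors.
class ColorRing
{
public:
    explicit ColorRing(const std::vector<Color32>& colors)
        : mColors(colors), mIndex(0)
    {
        assert(!mColors.empty());
    }

    // Return the current color, then step to the next one,
    // wrapping back to index 0 after the last color.
    Color32 GetColor()
    {
        const Color32 color = mColors[mIndex];
        mIndex = (mIndex + 1) % mColors.size();
        return color;
    }

private:
    std::vector<Color32> mColors;
    std::size_t mIndex;
};
```

With a two-color ring and three items, the third item simply receives the first color again.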

Color Ramp

The ColorRamp class also operates on a given ColorPalette. It treats the ColorPalette as its own color subspace that can be accessed via a parametric value in the range of [0,1]. In other words, when client code wants a color from the ColorRamp, it must provide a parametric value, which is then mapped into the ColorPalette.

Additionally, my implementation includes a RampType flag that indicates whether it will behave as a gradient or whether it will round (up or down) to the nearest neighboring color in the palette.

The Evaluate() routine uses the given parametric value to find the two nearest colors, and then computes a resulting color based on the aforementioned RampType flag. In the case of the gradient, the colors are linearly interpolated with the parametric value remainder; while the other two behaviors simply round to one color or the other based on the parametric value remainder.

    Color32 Evaluate(const float parametricValue) const
    {
        // Out-of-range parameters map to the first or last palette color.
        if (parametricValue <= 0.0f)
            return mColorPalette[0];
        if (parametricValue >= 1.0f)
            return mColorPalette[mColorPalette.Count-1];

        // Scale [0,1] onto the palette's index range [0, Count-1].
        const float cValue = parametricValue * (mColorPalette.Count - 1);
        const int idxA = (int)MathCore::Floorf(cValue);
        const int idxB = idxA + 1;

        switch (mRampType)
        {
        case RampTypeId::eGradient:
        {
            // Blend the two neighbors by the fractional part of cValue.
            const float fracBetween = cValue - (float)idxA;
            const Color32& colorA = mColorPalette[idxA];
            const Color32& colorB = mColorPalette[idxB];
            return Color32::Lerp(colorA, colorB, fracBetween);
        }
        case RampTypeId::eRoundDown:
            return mColorPalette[idxA];
        case RampTypeId::eRoundUp:
            return mColorPalette[idxB];
        default:
            PULSAR_ASSERT(false);
            break;
        }

        // should never get here
        return Color32::eBlack;
    }

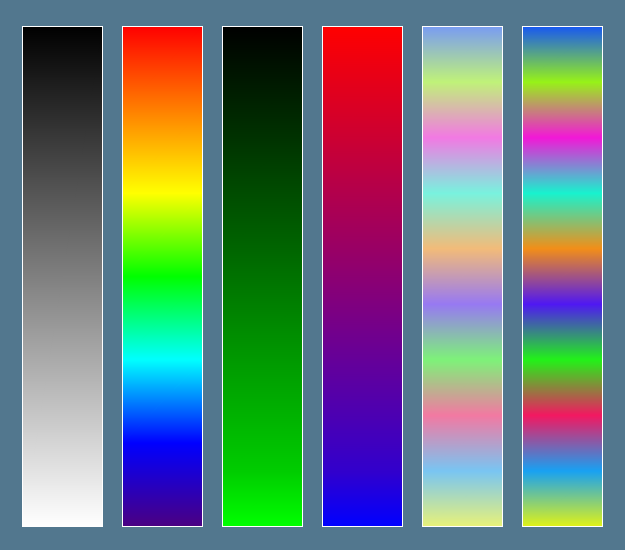

Here’s what it looks like when we create a corresponding gradient ColorRamp for each of the palettes shown above:

Initially, I wrote the ColorRamp class to help with assigning color values to vertices in a height field, but since then I have found several other interesting applications for it.
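For the height-field case, the only extra step is turning a vertex height into the parametric value that Evaluate() expects. The helper below is a hypothetical sketch of that mapping, assuming the minimum and maximum heights of the field are known up front; it is not taken from my library.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: map a height in [minHeight, maxHeight] to [0,1].
float HeightToParametric(float height, float minHeight, float maxHeight)
{
    assert(minHeight <= maxHeight);
    const float range = maxHeight - minHeight;
    if (range <= 0.0f)
        return 0.0f;   // flat field: every vertex gets the first color

    // Normalize, then clamp in case the height falls outside the range.
    const float t = (height - minHeight) / range;
    return std::min(1.0f, std::max(0.0f, t));
}
```

Each vertex color would then come from a call such as ramp.Evaluate(HeightToParametric(h, minH, maxH)), where ramp, h, minH, and maxH are placeholder names.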

Final Thoughts

While I concentrated on exhibiting a few of the core classes in my Color library at a high level, it should be pretty clear that there are a lot of possibilities when it comes to Color. You can find more in-depth information, gritty details, and sample code in the references provided below. I highly encourage you to read them even if you’re only the slightest bit interested — they are well worth your time.

References

- Ankerl, Martin. How to Generate Random Colors Programmatically

- devmag.org.za. How to Choose Colours Procedurally (Algorithms)

- Unreal Wiki. HSV-RGB Conversion

- Wikipedia. HSL and HSV